Computing revolutions are surprisingly rare. Despite the extraordinary technological progress that separates the first general-purpose digital computer—1945’s ENIAC—from the smartphone in your pocket, both machines actually work the same fundamental way: by boiling down every task into a simple mathematical system of ones and zeros. For decades, so did every other computing device on the planet.

Then there are quantum computers—the first ground-up reimagining of how computing works since it was invented. Quantum is not about processing ones and zeros faster. Instead, it runs on qubits—more on those later—and embraces advanced physics to take computation places it’s never been before. The results could one day have a profound impact on medicine, energy, finance, and beyond—maybe, perhaps not soon, and only if the sector’s greatest expectations play out.

The field’s origins trace to a 1981 conference near Boston cohosted by MIT and IBM called Physics of Computation. There, legendary physicist Richard Feynman proposed building a computer based on the principles of quantum mechanics, pioneered in the early 20th century by Max Planck, Albert Einstein, and Niels Bohr, among others. By the century’s end—following seminal research at MIT, IBM, and elsewhere, including Caltech and Bell Labs—tech giants and startups alike joined the effort. It remains one of the industry’s longest slogs, and much of the work lies ahead.

Some of quantum’s biggest news has arrived in recent months and involves advances on multiple fronts. In December, after more than a decade of development, Google unveiled Willow, a Post-it-size quantum processor that its lead developer, Hartmut Neven, described as “a major step.” In February, Microsoft debuted the Majorana 1 chip—“a transformative leap,” according to the company (though some quantum experts have questioned its claims). A week later, Amazon introduced a prototype of its own quantum processor, called Ocelot, deeming the experimental chip “a breakthrough.” And in March, after one of D-Wave’s machines performed a simulation of magnetic materials that would have been impossible on a supercomputer, CEO Alan Baratz declared his company had attained the sector’s “holy grail.” No wonder the tech industry—whose interest in quantum computing has waxed and waned over its decades-long gestation—is newly tantalized.

Bluster aside, none of these developments has led to a commercial quantum computer that performs the kinds of world-changing feats the field’s biggest advocates anticipate. More twists lie ahead before the field reaches maturity—if it ever does—let alone widespread adoption. A 2024 McKinsey study of the sector reflects this uncertainty, projecting that the quantum computing market could grow from between $8 billion and $15 billion this year to between $28 billion and $72 billion by 2035.

Whatever comes next isn’t likely to be boring. So here’s a brief overview of computing’s next big thing, which many of us have heard of but few fully understand.

1. Let’s start with “why.” What exactly can quantum computers do that today’s supercomputers can’t?

We’re talking about a computer that could, theoretically, unleash near-boundless opportunity in fields that benefit from complex simulations and what-if explorations. The goal of quantum computer designers isn’t just to beat supercomputers, but to enable tasks that aren’t even possible today.

For example, Google says its new Willow quantum chip took five minutes to complete a computational benchmark that the U.S. Department of Energy’s Frontier supercomputer would have needed 10 septillion years to finish. That’s a one followed by 25 zeros. By that math, even a supercomputer that was a trillion times faster than Frontier—currently the world’s second-fastest supercomputer—would require 10 trillion years to complete the benchmark.
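For readers who want to check that math, here is a minimal Python sketch. It uses only the figures quoted above; the variable names are ours, chosen for illustration.

```python
# Figures quoted in the article; the names are illustrative.
SEPTILLION = 10**24
TRILLION = 10**12

frontier_years = 10 * SEPTILLION        # 10 septillion years
zeros = len(str(frontier_years)) - 1    # digits after the leading 1

# A hypothetical machine a trillion times faster than Frontier
faster_years = frontier_years // TRILLION

print(zeros)                            # 25
print(faster_years == 10 * TRILLION)    # True
```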

Now, a lab-run benchmark is not the same as a world-changing feat. But Google’s test results hint at what quantum might be capable of accomplishing. Like other recent milestones, it’s tangible proof that the technology’s unprecedented potential is more than theoretical.

2. Dare I ask how quantum computers work? Do I even need to know?

As with a personal computer, you probably don’t, unless you’re planning to build one. Simply using one—which you probably won’t do anytime soon—also won’t require familiarity with the gnarly details.

The scientific underpinnings are fascinating, though. Very quickly: Quantum computers are based on aspects of quantum physics that can sound weird to us mere mortals. Entanglement, for example, describes a quantum connection between two or more particles or systems that persists even if they’re light-years apart. And superposition means that a quantum system can exist in a state of multiple possibilities, all at the same time.

In quantum computers, the quantum bit—or qubit—takes advantage of these properties. While a conventional bit is binary, containing either a one or a zero, a qubit can have a state of one, zero, or anywhere in between. Entangled qubits work in concert, allowing algorithms to manipulate multiple qubits so they affect each other and initiate a vast, cascading series of calculations. These mind-bending capabilities are the basis of quantum computing’s power.
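To make “anywhere in between” a bit more concrete, here is a toy Python sketch of the standard textbook picture, in which a qubit is a pair of numbers called amplitudes and the chance of reading a zero or a one is the square of each amplitude’s size. It is an illustration only, not how any real quantum computer is programmed, and the function names are made up for this example.

```python
import math

def probabilities(state):
    """Chance of reading each outcome: the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]

def hadamard(state):
    """Apply the Hadamard gate, which turns a definite 0 into an equal mix of 0 and 1."""
    a, b = state
    s = 1 / math.sqrt(2)
    return [s * (a + b), s * (a - b)]

zero = [1.0, 0.0]                  # a qubit that is definitely 0
superposed = hadamard(zero)        # now "in between" 0 and 1
single_probs = probabilities(superposed)

# Two entangled qubits (a Bell state): amplitudes for outcomes 00, 01, 10, 11.
s = 1 / math.sqrt(2)
bell = [s, 0.0, 0.0, s]
bell_probs = probabilities(bell)

print([round(p, 3) for p in single_probs])   # [0.5, 0.5]
print([round(p, 3) for p in bell_probs])     # [0.5, 0.0, 0.0, 0.5]
```

In the entangled pair, the outcomes 01 and 10 never show up, so reading one qubit tells you what the other will be.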

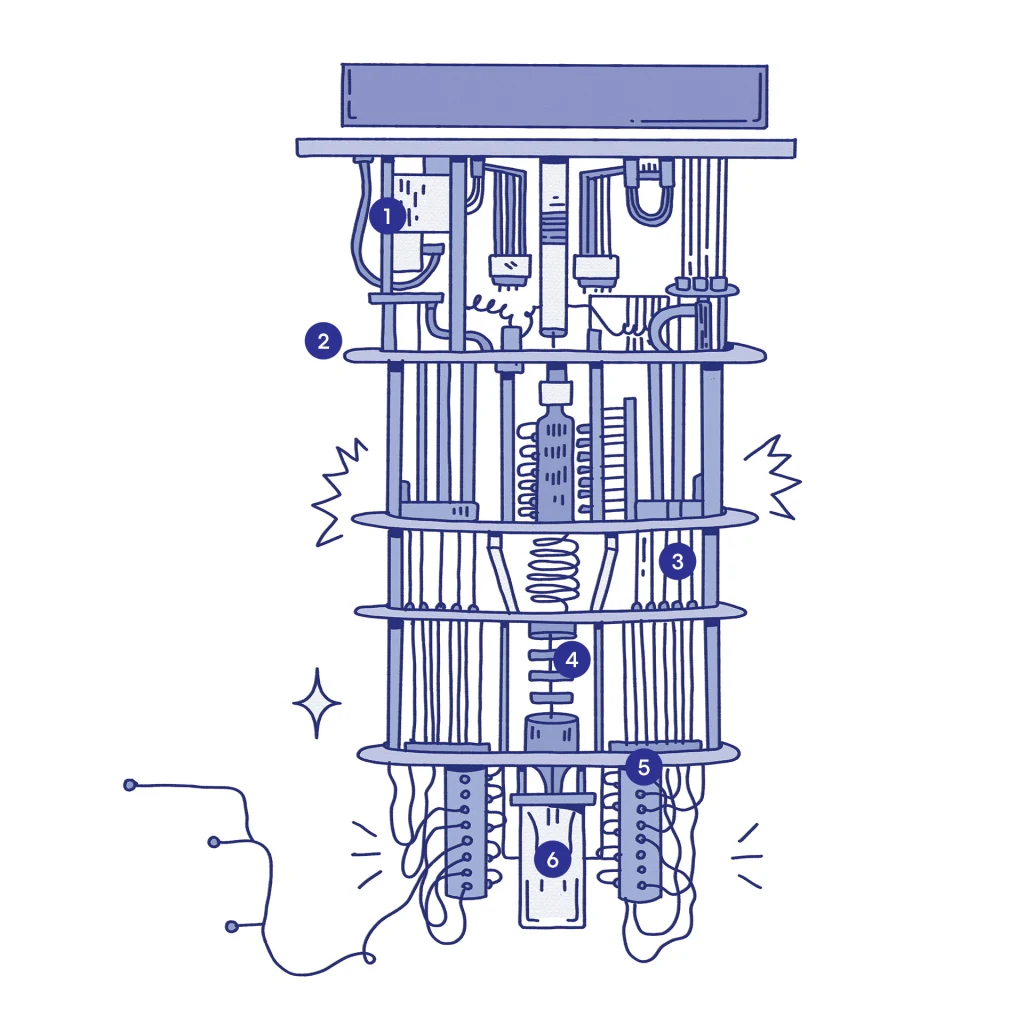

But taming quantum physics is one of the most daunting tasks scientists have ever taken on. To maintain their quantum state, many qubits must be cooled to just a skosh above absolute zero. This requirement leads to the unique look of the machines, which resemble steampunk chandeliers. A stack of suspended discs, often made of gold-plated copper, brings the temperature progressively down, with snakes of cables shuttling data in and out of the qubits.

3. I’m already lost.

You’re not alone! One metaphor to explain quantum involves magical pennies. Imagine you’re tasked with laying out every possible heads-tails combination for the outcome of a 100-coin toss. With ordinary pennies, you’d need more than a nonillion coins—that’s a one followed by 30 zeros. Now imagine 100 magical pennies that can represent all the different combinations at the same time, covering every possible outcome. Much more efficient.

Back in the real world, many tasks are as complex as a 100-coin toss, and they’d quickly max out the ones and zeros of non-quantum machines, also known as classical computers. By going beyond the binary, qubits hold the promise of turning these tasks into magical-penny projects. Otherwise impossible computing work would become feasible.
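The penny arithmetic is easy to verify. In this short Python sketch (the names are illustrative), each coin doubles the number of possible outcomes, so 100 coins yield 2 to the 100th power combinations, and laying every one of them out takes 100 pennies apiece.

```python
COINS = 100
NONILLION = 10**30

combinations = 2**COINS                  # each coin doubles the number of outcomes
pennies_needed = combinations * COINS    # 100 pennies laid out per combination

print(combinations)                # 1267650600228229401496703205376
print(combinations > NONILLION)    # True
```

Either way you count, combinations or individual pennies, the total clears a nonillion.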

4. That helps—but give me some examples.

Quantum computing holds particular promise in biology and materials science. Eventually, a sufficiently advanced quantum machine may be able to model molecular structures with unprecedented precision, potentially transforming everything from novel drug discovery in pharmaceuticals to the development of new kinds of batteries that would lead to cheaper EVs with greater range. Financial analysis is another prime application: Someday, a quantum computer might be better at data-intensive undertakings—portfolio optimization, securities lending, risk management, identifying arbitrage opportunities—than any classical computer.

Today’s commercially available quantum computers can’t pull off such extraordinary accomplishments. Still, some companies are already dabbling with the technology. For instance, Maryland quantum startup IonQ established a partnership with Airbus in 2022 to experiment with optimizing the process of loading cargo in a range of shapes, sizes, and weights onto aircraft with varying capacities—the kind of massive math problem that quantum is designed to solve.

5. Impressive! So why aren’t quantum computers everywhere already? Didn’t you say the idea dates to 1981?

Reliability remains an issue. Even once you’ve cooled a quantum machine to near absolute zero (no small undertaking), the slightest temperature fluctuation or electromagnetic interference can cause qubits to perform erratically.

To mitigate this, quantum designers deploy error-correction tech that pools error-prone physical qubits into fewer, more robust “logical” qubits. Increasing the number of physical qubits that can be combined into logical ones is key to the industry’s ambitions. The more reliable logical qubits there are in its processor, the stronger a quantum computer’s ability to tackle sophisticated projects.
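Why pooling helps can be seen in a simple classical analogy: keep several copies of a bit and take a majority vote. The Python sketch below is only that analogy, under the assumption that each copy fails independently. Real quantum error correction is far more involved, because qubits can’t simply be copied, and the error rate used here is a hypothetical number picked for illustration.

```python
from math import comb

def logical_error_rate(p, n):
    """Chance that a majority of n independent copies are wrong,
    when each copy is wrong with probability p (n should be odd)."""
    majority = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p)**(n - k)
               for k in range(majority, n + 1))

p = 0.01  # hypothetical error rate per copy, for illustration only
rates = {n: logical_error_rate(p, n) for n in (1, 3, 5, 7)}

for n, rate in rates.items():
    print(n, rate)
```

With these assumed numbers, the chance of a wrong majority shrinks quickly as more copies are pooled, which is the intuition behind trading many physical qubits for a few dependable logical ones.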

The new Majorana 1 chip, for one, holds the potential to scale up to “a million qubits in the palm of your hand,” says Krysta Svore, technical fellow for advanced quantum development at Microsoft. With just eight physical qubits, the prototype—based on a property called topological superconductivity—is a start. “You need around 100 [logical qubits] for problems in science to outperform the classical results,” Svore says. “At around 1,000 reliable logical qubits, you’ll see industrial value in chemistry.” Microsoft hopes to reach that milestone in years, not decades.

6. Who else is taking part in the race to make quantum real?

More companies than you might guess. A few are household names, such as IBM, which helped launch the whole field at that 1981 conference and deployed its first commercial quantum machine, the IBM Q System One, in 2019. Among its contributions to the field is Qiskit, an open-source software platform for writing algorithms that can be run on quantum computers—not just IBM ones, but others as well.

The overwhelming majority of players, however, are small and focused on quantum. McKinsey’s 2024 report counted 261 such startups that had received a total of $6.7 billion in investment—104 in the U.S. and Canada, and 24 in the U.K., another hub. But McKinsey says its list is not exhaustive and that it’s particularly difficult to determine how much activity is going on in China, where it identified just 10 quantum computing startups.

Some of these companies are developing their own quantum computers from the ground up, often based on novel approaches. In January, for example, Toronto-based Xanadu announced Aurora, a 12-qubit machine built around photonic, or light-based, qubits rather than superconducting ones, allowing it to run at room temperature. Others are carving off specific aspects of the technology and looking for opportunities to collaborate with hardware makers. They include U.K.-based Phasecraft, which is focused on optimizing quantum algorithms and is partnering with Google and IBM, among others.

Any quantum startup would be happy to become the Google, Amazon, or Microsoft of this new computing form, though Google, Amazon, and Microsoft share the same aim and can pour their colossal resources into achieving it. Upstarts and behemoths alike are still in the early stages of a long journey to full commercialization of the technology. Figuring out which ones might dominate a few years from now is as much of a crapshoot as picking the internet economy’s ultimate winners would have been in the mid-’90s.

7. Once quantum computers are humming, will classical computing go away?

Highly unlikely. From word processing to generative AI, classical computers excel at general-purpose work outside quantum computing’s domain. Few companies will buy their own quantum computers, which will remain complex and costly. Instead, businesses will access them as cloud services from providers like Amazon Web Services, Google Cloud, and Microsoft Azure, combining quantum machines with on-demand classical computers to accomplish work that neither could achieve on its own. Quantum’s ability to explore many data scenarios in parallel may make it an efficient way to train the AI algorithms that run on classical machines. “We envision a future of computing that’s heterogeneous,” says Jay Gambetta, VP of quantum at IBM.

8. Sounds like a tool that could do a lot of good—but also harm. What are the anticipated risks?

The one that concerns people the most is a doozy. Most internet data is secured via encryption techniques that date to the 1970s—and which remain impervious to decoding by classical computers. At some point, however, a quantum machine will likely be able to do so, and quickly. It’s up to the industry to start girding itself now so that businesses and individuals are protected when this eventuality comes.

Quantum-resistant, or quantum-secure, encryption—sturdy cryptographic schemes that withstand quantum-assisted cracking—is possible today: Apple has already engineered its iMessage app to be quantum-resistant. Samsung has done the same with its newest backup and syncing software. But implementing such tech globally, across all industries, will prove a huge logistical challenge. “You have to start that transition now,” says IBM’s Gambetta.

So far, the U.S. Commerce Department’s National Institute of Standards and Technology (NIST) has played a critical role in bolstering cryptographic standards for the quantum era. How well that effort will survive the Trump administration’s sweeping shrinkage of federal resources—which has already resulted in layoffs at NIST—is unclear. “I’m a little less optimistic about our ability to do anything that requires any coordination,” says Eli Levenson-Falk, an associate professor and head of a quantum lab at the University of Southern California.

9. Is there a chance that quantum computing will never amount to much?

Most experts are at least guardedly hopeful that it will live up to their expectations. But yes, the doubters exist. Some argue that scaling up the technology to a point where it’s practical could prove impossible. Others say that even if it does work, it will fall short of delivering the expected epoch-shifting advantages over classical computers.

Even before you get to outright pessimism, cold, hard reality could dash the most extravagant expectations for quantum as a business. In January, at the CES tech conference in Las Vegas, Nvidia CEO Jensen Huang said he thought “very useful” quantum machines were likely 20 years away. Huang is no hater: Nvidia is actively researching the technology, focused on how supercomputers using the company’s GPU chips might augment quantum computers, and vice versa. Nevertheless, his cautious prognostication prompted Wall Street to punish the stocks of publicly held quantum companies D-Wave, IonQ, and Rigetti Computing. If enough American investors grow antsy about when they’ll start seeing returns on quantum, the tech’s future in the U.S. could rapidly dim.

10. Twenty years! That sounds like forever.

Even the optimists can’t be all that specific about when quantum computers will be solving significant problems for commercial users. Levenson-Falk believes it could happen in the next “one to 10 years,” and says the uncertainty has less to do with the hardware being ready than with identifying its most promising applications.

Consider the historical arc of another field that launched at a school-sponsored gathering, this time at a Dartmouth workshop in the summer of 1956: artificial intelligence. More than 50 years elapsed before the foundational breakthroughs that paved the way for generative AI; ChatGPT didn’t come along until another decade after that. And we’re only just beginning to discover meaningful everyday applications for the technology. That quantum computing is proving to be a similarly epic undertaking shouldn’t shock anyone—even if the end of the beginning is not yet in sight.

The Strange Beauty of a Quantum Machine

Some, like this IBM Q System One, look like chandeliers. They’re colder than outer space.

1. Pulse tube coolers

As signals move toward the computer’s core, things get chillier. Here begins the first stage of cooling, via the thermodynamic heat transfer of helium gas, to 4 kelvin.

2. Thermal shields

These layered plates act as heat blockers, isolating each successive, increasingly cold layer from external heat.

3. Coaxial cables

These lines carry microwave pulses down to the quantum chip and bring its amplified output back out. Their gold plating helps reduce energy loss.

4. Mixing chamber

The mingling of two helium isotopes—gases in the atmosphere but liquids here—provides the final blast of cooling power, bringing the temperature of the chip to 15 millikelvin.

5. Quantum amplifiers

These components amplify the weak signals that carry the qubits’ states while minimizing noise.

6. Quantum processor

About the size of a stamp, this chip houses the qubits where computing magic occurs.